Future of AI – Google’s New AI Model Gemini

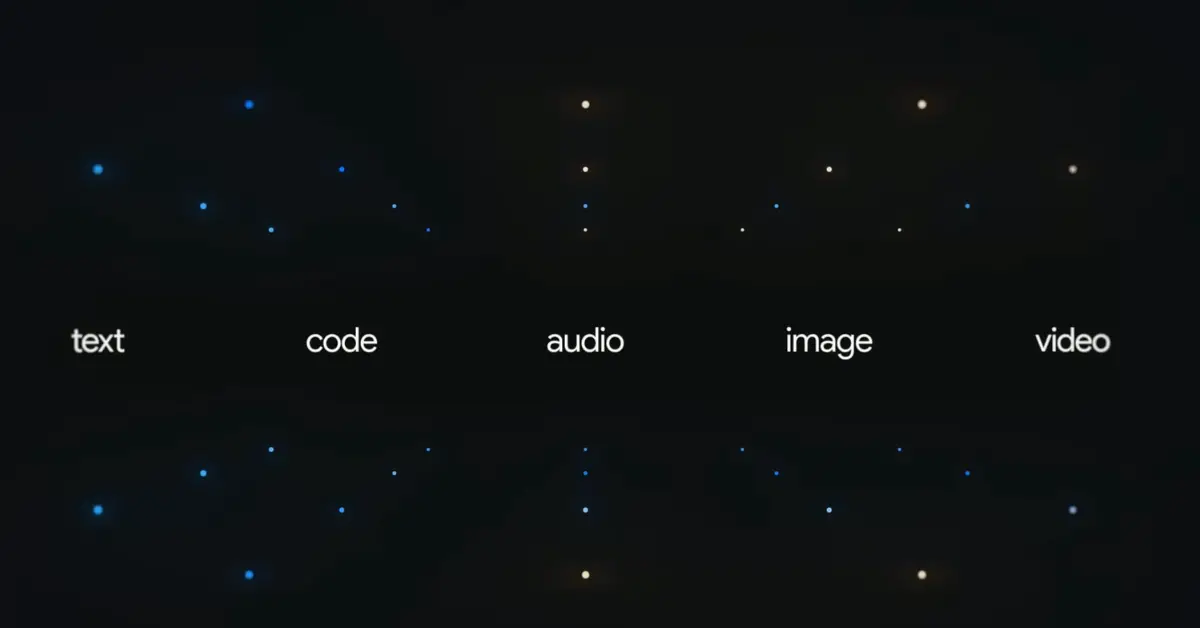

The realm of artificial intelligence witnessed a paradigm shift on December 6, 2023, with Google’s unveiling of Gemini. This groundbreaking AI model introduces a multifaceted approach to understanding and processing information across various modalities. From text and code to audio, images, and video, Gemini promises a revolutionary leap in AI capabilities.

What is Gemini AI?

Gemini is the latest artificial intelligence (AI) model from Google, developed by Google DeepMind together with other teams across the company. It was designed from the outset as a multimodal model. Where many earlier AI models focused mainly on text, Gemini is built to handle several types of data, including text, code, audio, images, and video. This lets it comprehend, process, and respond to different forms of information in a single interaction.

Understanding Gemini’s Models

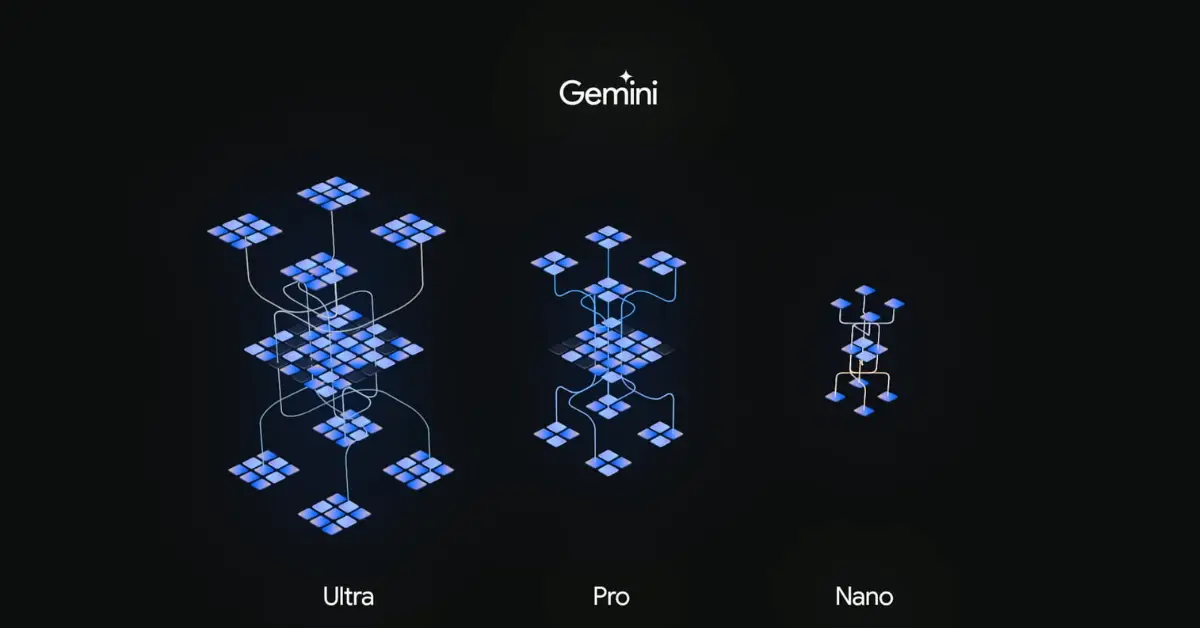

Gemini 1.0 presents three distinct models:

- Gemini Ultra: The largest and most capable model, designed for tackling complex tasks. According to Google's reported results, it outperforms GPT-4 on most benchmarks, but it is not yet available to the public.

- Gemini Pro: The most versatile model, currently accessible in Bard. It offers a balance between performance and accessibility.

- Gemini Nano: The most efficient model, designed for on-device tasks on mobile devices such as the Pixel 8 Pro.

Each model targets a different class of task. Ultra is reserved for the most demanding workloads. Pro, which is available now, is broadly comparable to GPT-3.5, the model behind the free version of ChatGPT. Nano is optimized to run directly on mobile devices.

Current Applications of Gemini:

- Bard: The popular AI assistant Bard has been upgraded with Gemini Pro, offering users improved text-based functionalities.

- Pixel 8 Pro: Google’s latest smartphone leverages Gemini Nano for features like summarizing recordings and enhancing Gboard’s smart reply.

- Google Search: Google is already experimenting with Gemini to improve its Search Generative Experience (SGE).

Future Availability:

- Gemini Pro API: Starting December 13, 2023, developers can access Gemini Pro through an API, offered via Google AI Studio and Google Cloud Vertex AI.

- Gemini Ultra: A broader release of Gemini Ultra is expected sometime in the coming year.
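For developers curious about what calling the Gemini Pro API might involve, here is a minimal sketch that builds a text-only request without sending it. It is an illustration under assumptions, not Google's official client: the base URL, the `gemini-pro` model name, the `generateContent` method, the `x-goog-api-key` header, and the `contents`/`parts` JSON shape reflect the public REST interface as commonly documented and should be checked against the current API reference. The helper name `build_generate_request` and the placeholder key are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint and model name; verify against the current API reference.
API_BASE = "https://generativelanguage.googleapis.com/v1beta"
MODEL = "gemini-pro"


def build_generate_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a text-only generateContent request."""
    url = f"{API_BASE}/models/{MODEL}:generateContent"
    # One user turn containing a single text part (assumed request shape).
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,  # assumed auth header
        },
        method="POST",
    )


# Hypothetical usage: "YOUR_API_KEY" is a placeholder, not a working key.
request = build_generate_request("Summarize the Gemini launch.", "YOUR_API_KEY")
print(request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would actually send it. That step needs a real key and network access, so it is left out here.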

Unveiling Multimodal Prowess

What sets Gemini apart is that it was designed to be multimodal from the ground up. Unlike earlier models that were trained primarily on text, with other modalities added later, Gemini was trained across multiple modalities from the start, which lets it reason over text, images, audio, and video together rather than handling each in isolation.

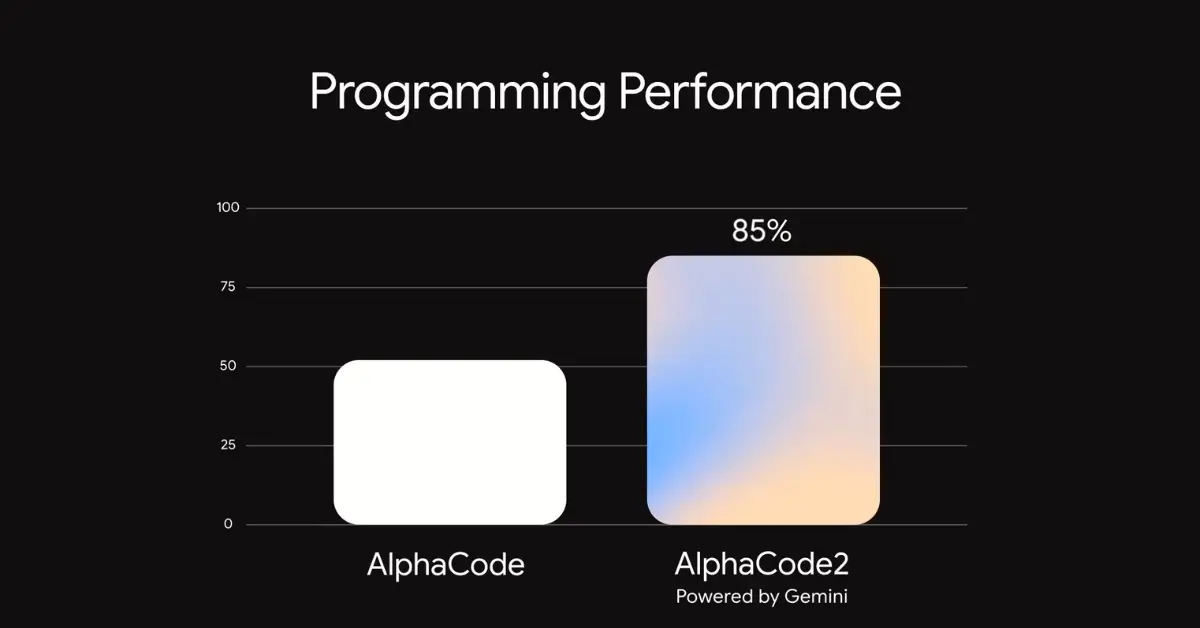

Benchmarking Success: Gemini Ultra vs. GPT-4

In Google's published benchmark results, Gemini Ultra outperformed GPT-4 in several domains, including reasoning on complex tasks. It also did well on vision-based challenges, speech translation, and logical responses generated from visual prompts, underscoring the breadth of its capabilities.

Practical Applications of Gemini

Gemini’s applications span diverse tasks, from interpreting handwritten math problems to multilingual translation and even generating code in multiple programming languages like Python, Java, C++, and Go. Its adaptability in creative tasks, transforming visual inputs into code snippets, positions Gemini as a versatile tool for developers.
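To make the "visual input to code" idea concrete, the sketch below shows how an image and a text instruction might be packed into one multimodal request body. It is illustrative only: the `inline_data`, `mime_type`, and `data` field names are assumptions about the API's JSON format and should be verified against the official reference, and the helper name `build_image_to_code_body` is hypothetical. The sketch only constructs the payload and does not contact any service.

```python
import base64


def build_image_to_code_body(image_bytes: bytes, mime_type: str, instruction: str) -> dict:
    """Combine a text instruction and one image into a single request body."""
    # Binary image data is base64-encoded so it can travel inside JSON.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": instruction},
                    # Assumed field names for inline image data.
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},
                ]
            }
        ]
    }


# Stand-in bytes; a real call would read an actual image file from disk.
body = build_image_to_code_body(
    b"fake-image-bytes",
    "image/png",
    "Write Python code that reproduces the chart in this image.",
)
```

The point of the design is that text and image travel as sibling parts of the same message, so the model can consider both together.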

Limitations and Ethical Considerations

Despite its promising features, Gemini launches with some limitations, such as the absence of image generation, a feature expected in later updates. In addition, Google's reluctance to disclose its training data sources raises ethical questions and underlines the need for transparency in AI development.

Rollout and Future Prospects

The rollout of Gemini 1.0 across Google products signals an imminent shift in AI integration. While Gemini Pro showcases advancements over predecessors, the forthcoming launch of Gemini Ultra promises unparalleled AI capabilities, reshaping the landscape of AI-driven solutions.

Conclusion

As anticipation builds for the release of Gemini Ultra, the AI community is eager to see what the model can do in practice. Gemini's integration of multiple data modalities paves the way for better user experiences, stronger problem-solving capabilities, and new AI-driven solutions.

FAQs

How does Gemini’s performance compare to previous AI models like GPT-3.5 and GPT-4?

The comparison depends on the model tier. Gemini Pro is broadly comparable to GPT-3.5, while Gemini Ultra, according to Google's reported results, outperforms GPT-4 on most benchmarks. The reported gains cover tasks such as reasoning, image recognition, code generation, and natural language understanding.

How does Gemini impact existing AI-powered platforms like chatbots and text-based AI models?

Gemini's introduction marks a notable step forward in AI capabilities, especially in handling several types of data at once. Its integration into Bard and other Google products hints at a potential shift in the AI landscape. Bard has already shown notable improvements with Gemini Pro, but the larger change is expected with the upcoming release of Gemini Ultra and its more advanced multimodal capabilities.